OpenAI has rolled out a new age-prediction system for ChatGPT, aiming to limit younger users’ exposure to sensitive content. The update, announced in September, adds a layer of safeguards that estimate a user’s age based on behavior and account activity. If the system misclassifies a user as under 18, a separate verification process is available.

At a Glance

- OpenAI’s new feature predicts user age and restricts sensitive content for those likely under 18.

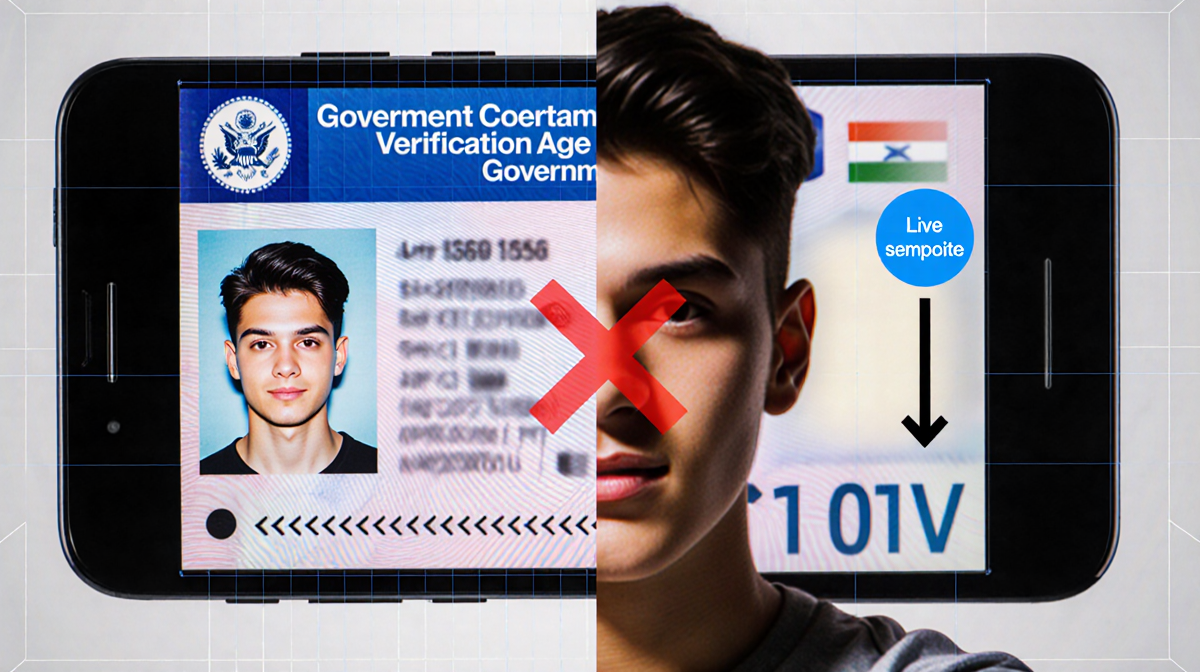

- Verification requires a live selfie and government-issued ID via Persona.

- The system targets graphic violence, self-harm depictions, risky challenges, sexual roleplay, and body-shaming content.

- Why it matters: Parents and regulators are pushing for stricter age controls across digital platforms.

How the Age-Prediction Works

OpenAI’s algorithm analyzes signals such as how long an account has existed, the times of day when the user is active, and other behavioral patterns. These indicators feed into a model that outputs an age estimate. The system is designed to flag users who are likely under 18 and then block or filter content that could be harmful to them.
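OpenAI has not published how its model works. The following is a minimal, hypothetical Python sketch of the flow described above: a few account signals are combined into a score, a threshold decides whether the under-18 experience applies, and a completed age verification overrides the prediction. The signal names, weights, threshold, and category labels are all invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical labels for the restricted categories OpenAI lists.
RESTRICTED_FOR_MINORS = frozenset({
    "graphic_violence",
    "self_harm",
    "risky_challenge",
    "sexual_roleplay",
    "body_shaming",
})


@dataclass
class AccountSignals:
    account_age_days: int         # how long the account has existed
    after_school_share: float     # hypothetical: share of sessions on weekday afternoons (0-1)
    youth_topic_share: float      # hypothetical: share of chats on youth-typical topics (0-1)
    verified_adult: bool = False  # hypothetical flag, True once a selfie + ID check succeeds


def minor_score(s: AccountSignals) -> float:
    """Toy score in [0, 1]; the weights are invented, not OpenAI's."""
    is_new = 1.0 if s.account_age_days < 90 else 0.0
    score = 0.3 * is_new + 0.35 * s.after_school_share + 0.35 * s.youth_topic_share
    return min(max(score, 0.0), 1.0)


def treat_as_minor(s: AccountSignals, threshold: float = 0.5) -> bool:
    # A completed age verification overrides the prediction.
    if s.verified_adult:
        return False
    return minor_score(s) >= threshold


def is_allowed(s: AccountSignals, category: str) -> bool:
    # Restricted categories are blocked only for accounts treated as minors.
    return not (treat_as_minor(s) and category in RESTRICTED_FOR_MINORS)


if __name__ == "__main__":
    teen_like = AccountSignals(account_age_days=30, after_school_share=0.8, youth_topic_share=0.7)
    adult_like = AccountSignals(account_age_days=1200, after_school_share=0.1, youth_topic_share=0.05)
    verified = AccountSignals(30, 0.8, 0.7, verified_adult=True)
    print(is_allowed(teen_like, "self_harm"))   # False
    print(is_allowed(adult_like, "self_harm"))  # True
    print(is_allowed(verified, "self_harm"))    # True
```

A production system would almost certainly use a trained classifier rather than hand-set weights. The fixed threshold simply makes the trade-off visible: lowering it catches more minors but sends more adults to verification.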

Verification Options

If age prediction wrongly places an adult in the under-18 experience, the platform offers a verification pathway:

- Live selfie: The user takes a real-time photo.

- Government ID: The user uploads a scanned or photographed ID.

- Persona: OpenAI uses Persona, a third-party identity-verification service, to confirm the user’s age.

A dedicated ChatGPT page walks users through this process.

Content Filters

OpenAI lists several categories that the system blocks for under-age users:

- Graphic violence or gore

- Depictions of self-harm

- Viral challenges that could encourage risky behavior

- Sexual, romantic, or violent role-playing

- Promotion of extreme beauty standards, unhealthy dieting, or body shaming

These filters align with broader concerns about AI-generated content and its impact on teens.

Legal and Regulatory Context

The move comes amid multiple lawsuits and investigations. In April, Ziff Davis, News Of Los Angeles’s parent company, filed a lawsuit alleging that OpenAI infringed Ziff Davis copyrights in training its AI systems. OpenAI also faces scrutiny over incidents involving teenagers who interacted with ChatGPT.

Recent regulatory trends include:

- Roblox’s mandatory age checks

- Australia’s new law banning social media for children under 16

- State-level proposals in the U.S. to enforce age verification across online services

OpenAI’s update reflects a growing industry shift toward age-based access restrictions.

Industry Response

Jake Parker, senior director of government relations at the Security Industry Association, praised the accuracy of modern verification tools.

“The US government performs an ongoing technical evaluation of such technologies through the National Institute of Standards and Technology’s Face Recognition Technology Evaluation and Face Analysis Technology Evaluation programs,” Parker said. “These programs show that at least the top 100 algorithms are more than 99.5% accurate for matching, even across demographics, while the top age-estimation technologies are more than 95% accurate.”

Kristine Gloria, chief operating officer of Young Futures, cautioned that technology alone is insufficient.

“We know that generative AI presents real challenges, and families need support in navigating them,” Gloria said. “However, strict monitoring has its limitations. To truly move forward, we need to encourage safety-by-design, where platforms prioritize youth wellbeing alongside engagement.”

Challenges and Next Steps

The effectiveness of the age-prediction model remains untested at scale. ChatGPT has about 800 million weekly active users, and how accurately the system performs across such a diverse population is still uncertain. OpenAI plans to refine the model over time and may add further safeguards.
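To see why accuracy matters at this scale, here is back-of-the-envelope arithmetic with hypothetical error rates. OpenAI has not published an error rate for its model; the 5% case only mirrors the “more than 95%” figure Parker cites for top age-estimation tools in general, not a measured number for ChatGPT.

```python
WEEKLY_ACTIVE_USERS = 800_000_000  # figure cited in the article


def affected_users(error_rate_pct: int, users: int = WEEKLY_ACTIVE_USERS) -> int:
    """Users misclassified at a hypothetical whole-percent error rate."""
    return users * error_rate_pct // 100


for pct in (1, 5):
    print(f"{pct}% error rate -> {affected_users(pct):,} users")
# 1% error rate -> 8,000,000 users
# 5% error rate -> 40,000,000 users
```

Errors cut both ways: some of those users would be adults wrongly sent to verification, others minors who slip past the filters.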

Key considerations for the future include:

- Enhancing transparency around how age is estimated

- Expanding parental controls beyond age filters

- Collaborating with educators to promote digital literacy

- Monitoring the impact of these measures on user experience and safety

Key Takeaways

| Feature | Status | Notes |
|---|---|---|
| Age-prediction algorithm | Deployed | Uses account and behavioral signals to estimate age |
| Verification via Persona | Available | Requires live selfie + ID |
| Content filters | Active | Targets violence, self-harm, risky challenges, sexual roleplay, body shaming |
| Regulatory alignment | Ongoing | Responds to emerging age-verification mandates |
| Accuracy claims | Industry-reported | Top NIST-evaluated tools: >99.5% for face matching, >95% for age estimation; not specific to OpenAI’s model |

OpenAI’s initiative represents a significant step toward protecting younger users on its flagship chatbot. While the technology shows promise, its long-term efficacy will depend on continued refinement, regulatory support, and a holistic approach to digital safety.

Future Outlook

Industry observers expect other AI platforms to follow suit, especially as lawmakers push for stricter age controls. The balance between user privacy, accessibility, and safety will remain a central debate.
