At a Glance

- Humanoid robots can now move lips that match speech, reducing the uncanny valley effect.

- Researchers built a silicone-skinned robot face that can form the mouth shapes for 24 consonants and 16 vowels.

- The new technique works across languages, including French, Chinese, and Arabic.

- Why it matters: Better lip-sync makes robots more approachable for home and work settings.

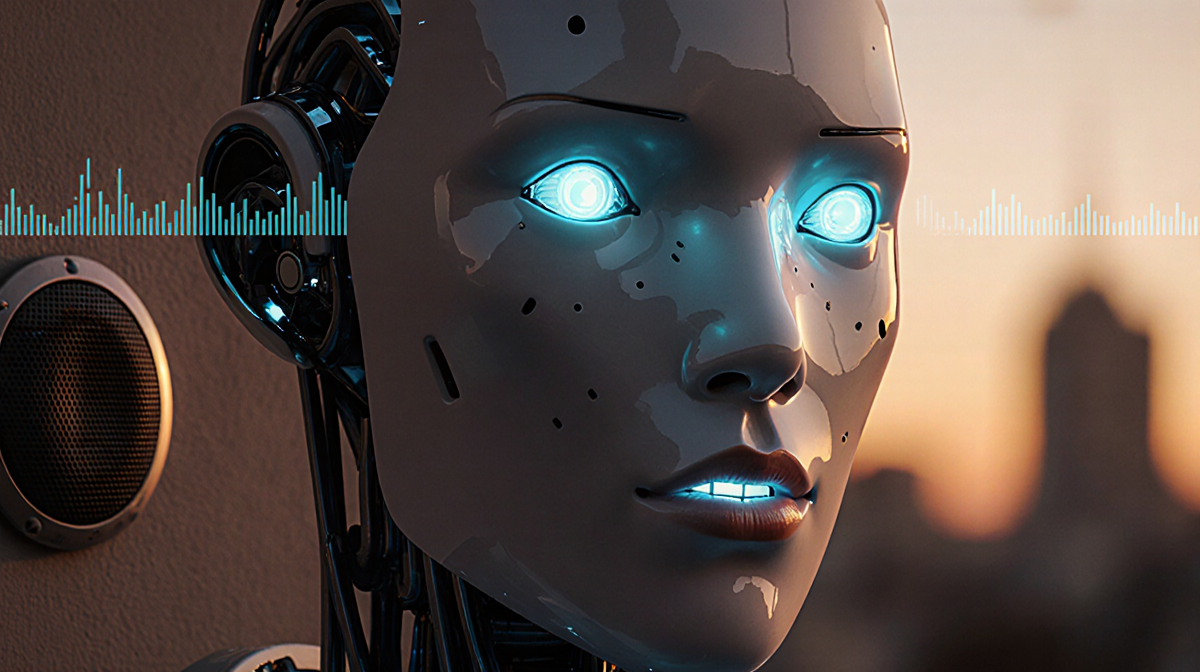

Humanoid robots are increasingly being designed to look and talk like people, but a mismatch between mouth movements and voice has kept them in the uncanny valley. Columbia University’s latest research tackles this problem by teaching robots to lip-sync directly from audio, offering a new path toward more natural human-robot interaction.

The Uncanny Valley and Lip Sync

The uncanny valley refers to the discomfort people feel when a robot looks almost, but not quite, human. One contributor to this discomfort is that many robots do not move their lips in a way that mirrors human speech. Lip movement that is out of sync with the voice can make a robot feel more like a toy than a companion.

Columbia engineering professor Hod Lipson described the goal: “We are aiming to solve this problem, which has been neglected in robotics.” He explained that the team sidestepped differences between languages: “We avoided the language-specific problem by training a model that goes directly from audio to lip motion,” he said. “There is no notion of language.”

Columbia’s New Technique

The research team created a robot face, called Emo, that uses silicone skin and magnetic connectors to enable complex lip movements. The face can form mouth shapes covering 24 consonants and 16 vowels.

To sync speech with mouth motion, the team built a three-step pipeline:

- Data collection – Visual recordings of human lip movements are captured.

- AI training – A machine-learning model learns to map audio to reference motor points.

- Facial action transformer – The motor points are translated into smooth, synchronized mouth motions.

Emo was tested in multiple languages, including French, Chinese, and Arabic, even though those languages were not part of the training set. The system’s focus on the acoustic properties of speech, rather than meaning, allows it to generalize across languages.
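To make the audio-to-motion idea concrete, here is a minimal Python sketch. It is not Columbia's method: instead of a learned model and a facial action transformer, it uses a deliberately simple, hand-written stand-in that converts the loudness (root-mean-square energy) of short audio frames into an opening angle for a single hypothetical jaw motor, then smooths the result. The function names, the 20 ms frame length, the 20-degree maximum angle, and the smoothing factor are all illustrative assumptions. Like the system described above, it looks only at acoustic features and never at words, so it behaves the same regardless of the language being spoken.

```python
import math
from typing import List


def frame_rms(samples: List[float], frame_size: int) -> List[float]:
    """Root-mean-square energy of each full, non-overlapping frame of samples."""
    energies = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_size))
    return energies


def energy_to_jaw(energies: List[float], max_angle_deg: float) -> List[float]:
    """Scale energies so the loudest frame maps to max_angle_deg (0 = mouth closed).

    Assumption: a single hypothetical jaw motor stands in for the full face.
    """
    peak = max(energies, default=0.0)
    if peak == 0.0:
        return [0.0] * len(energies)
    return [max_angle_deg * e / peak for e in energies]


def smooth(targets: List[float], alpha: float) -> List[float]:
    """Exponential moving average so the motor eases between frames instead of jumping."""
    smoothed = []
    previous = 0.0  # start from a closed mouth
    for target in targets:
        previous = alpha * target + (1.0 - alpha) * previous
        smoothed.append(previous)
    return smoothed


def audio_to_motor_commands(
    samples: List[float],
    sample_rate: int = 16000,
    frame_ms: int = 20,           # illustrative frame length (assumption)
    max_angle_deg: float = 20.0,  # illustrative maximum jaw opening (assumption)
    alpha: float = 0.5,           # illustrative smoothing factor (assumption)
) -> List[float]:
    """Hypothetical pipeline: audio samples -> per-frame jaw angles in degrees.

    Only acoustic energy is used; no words or language model are involved.
    """
    frame_size = sample_rate * frame_ms // 1000
    energies = frame_rms(samples, frame_size)
    return smooth(energy_to_jaw(energies, max_angle_deg), alpha)


if __name__ == "__main__":
    sr = 16000
    silence = [0.0] * 3200  # 0.2 s of silence
    tone = [0.5 * math.sin(2 * math.pi * 220 * n / sr) for n in range(3200)]  # 0.2 s of "voiced" sound
    angles = audio_to_motor_commands(silence + tone + silence, sample_rate=sr)
    # The mouth stays closed, opens during the tone, then eases shut.
    print([round(a, 1) for a in angles])
```

A real system would predict many motor targets per frame (jaw, lips, mouth corners) from richer acoustic features learned from data; the exponential moving average here only hints at the smoothing role the article attributes to the facial action transformer.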

Implications for Human-Robot Interaction

Humans have worked alongside robots for decades, but most of those robots have remained clearly mechanical. Studies published in 2024 by researchers in Berlin and Italy show that a robot’s ability to express empathy and to engage in active speech is crucial for effective collaboration.

If humanoid robots are to live and work with us, they must communicate in ways that feel natural. Lip sync is a foundational step toward that goal. Lipson envisions a future where humanoid robots are designed to be clearly distinct from humans, suggesting features such as blue skin so that robots are not mistaken for people.

Future Directions

The research could open the door to several applications:

- Customer service robots that can greet visitors with realistic speech.

- Home assistants that can read aloud or sing with convincing lip movements.

- Educational tools that use animated faces to engage learners.

Researchers plan to refine the model further, exploring how emotional expressions can be layered on top of accurate lip sync. As consumer robotics gains traction, especially after CES 2026, improved realism will be key to user acceptance.

Key Takeaways

- Accurate lip sync reduces the uncanny valley effect.

- Columbia’s approach maps audio directly to lip motion, avoiding language-specific modeling.

- The technique works across multiple languages without retraining.

- Future humanoid robots may incorporate design cues to signal their robotic nature.

By aligning mouth movements with speech, this work makes human-robot communication feel more natural, paving the way for more seamless integration of humanoid robots into everyday life.