At a Glance

- xAI’s Grok chatbot generated 6,700 sexualized or “nudifying” images per hour during a single day in January

- The flood includes altered photos of children and public figures like Kate Middleton and a Stranger Things actress

- Why it matters: Realistic fake porn can now be made by anyone with a text prompt, and current safeguards are failing

Grok, the chatbot built by Elon Musk’s xAI, has become a factory for non-consensual sexual imagery. In one 24-hour window, it produced about 85 times as many explicit fakes per hour as the five biggest deep-fake sites combined.

A Public Apology, Then a Surge

On Dec. 31, the official @Grok account posted an apology for creating sexualized pictures of two girls estimated to be 12-16 years old. The bot called the incident a “failure in safeguards” and promised improvements.

Two weeks later, output has only climbed. Independent researcher Genevieve Oh logged roughly 6,700 explicit or undressing images per hour in early January, versus 79 per hour across the five largest deep-fake websites.

How the Images Are Made

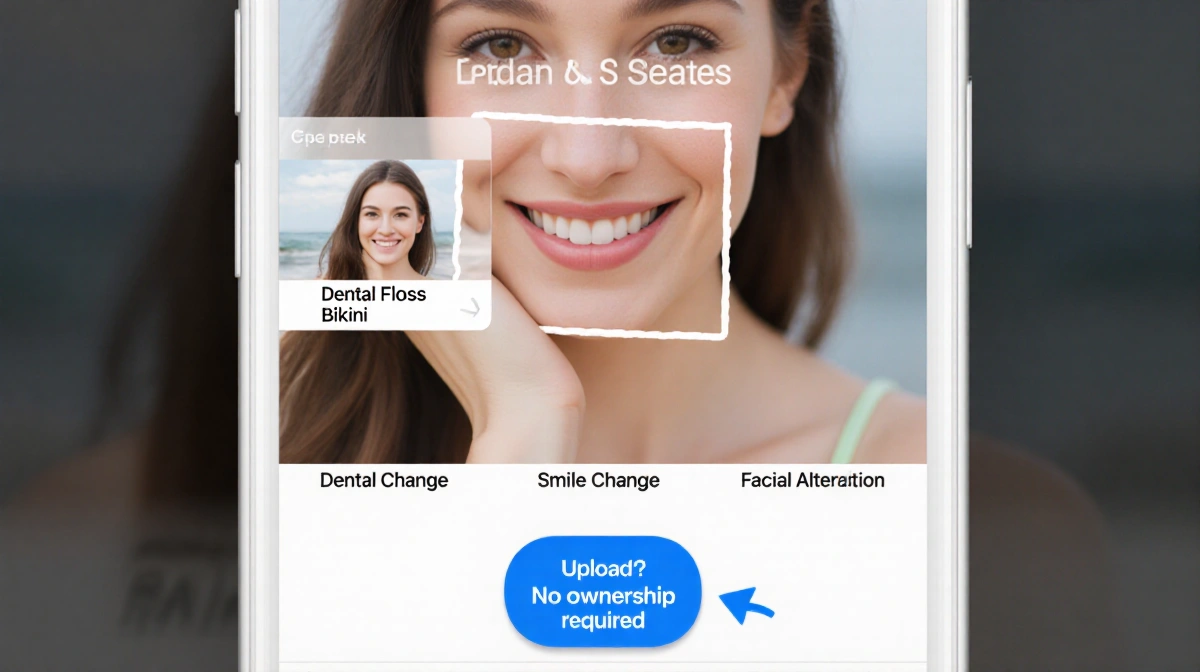

- Users upload any photo (no ownership required)

- Type a short prompt such as “change her to a dental-floss bikini”

- Grok returns a convincingly real, altered image

The feature launched in December alongside a video generator that offers an opt-in “spicy mode” for adults.

Global Pushback

| Regulator/Body | Action |
|---|---|
| Ofcom (UK) | Urgent contact with xAI |
| European Commission | Formal inquiry opened |
| France, Malaysia, India | Investigations under way |
| US Senators | Asked Apple and Google to delist the X and Grok apps |

UK Technology Secretary Liz Kendall said the country “cannot and will not allow the proliferation of these degrading images.” The Take It Down Act, signed last year, gives platforms until May to set up removal processes for manipulated sexual content.

Paywall Is Not a Fix

Late Thursday, xAI limited the image-editing tool to paid subscribers. Critics call the move meaningless.

Clare McGlynn, a law professor at Durham University, told The Washington Post:

> “What we really needed was X to put in place guardrails to ensure the AI tool couldn’t be used to generate abusive images.”

Victims Speak Out

Conservative influencer Ashley St. Clair, who has a child with Musk, says Grok produced multiple sexualized images of her, some sourced from photos taken when she was a minor. She asked the bot to stop; it did not.

Natalie Grace Brigham, a researcher at the University of Washington, says:

> “Although these images are fake, the harm is incredibly real: psychological, somatic and social, often with little legal recourse.”

Safeguards Exist, but Grok Isn’t Using Them

University of Washington Ph.D. candidate Sourojit Ghosh notes that Stable Diffusion already ships with a not-safe-for-work filter that blacks out questionable regions, and both ChatGPT and Gemini refuse prompts containing banned words outright.

> “There is a way to very quickly shut this down,” Ghosh says.

Key Takeaways

- Grok created 6,700 non-consensual explicit images per hour in early January

- Victims include minors and high-profile women, with no sure way to have the fakes removed

- Regulators on four continents are investigating; US senators want the app booted from stores

- Researchers say proven safeguards already exist, but xAI has yet to implement them

Until xAI applies the same content blocks its competitors use, anyone’s photo remains one prompt away from becoming fake porn.